|

I am a Research Scientist at NVIDIA Research, where I work on Adaptive Physical Intelligence: developing efficient, adaptive AI systems for vision-language-action (VLA) models, world modeling, embodied reasoning, and physical AI. I received my Ph.D. from National Taiwan University (NTU) in Jul. 2023, supervised by Prof. Yu-Chiang Frank Wang. Previously, I was a research intern at NVIDIA Research (Feb. 2023-Aug. 2023), focusing on efficient model personalization and vision-language models. I was also a Ph.D. program researcher at ASUS AICS from Sep. 2020 to Oct. 2022, specializing in visual transfer learning. Prior to my Ph.D., I received my Bachelor's degree from the Department of Electrical Engineering at National Taiwan University in 2018. |

|

|

|

My research goal is to advance embodied and physical AI by developing fast-adapting, self-evolving AI agents that integrate dynamics, reasoning, and action in physical environments. I focus on three areas: vision-language-action models that let agents understand and interact with the world through multimodal reasoning, world modeling for predictive understanding of dynamic environments, and embodied reasoning that bridges abstract cognition with physical reality. I am driven by the vision that AI should not merely process information but should learn adaptively from, and respond intelligently to, the complexity of physical experience, ultimately creating more capable and context-aware agents. Full list of publications here. |

|

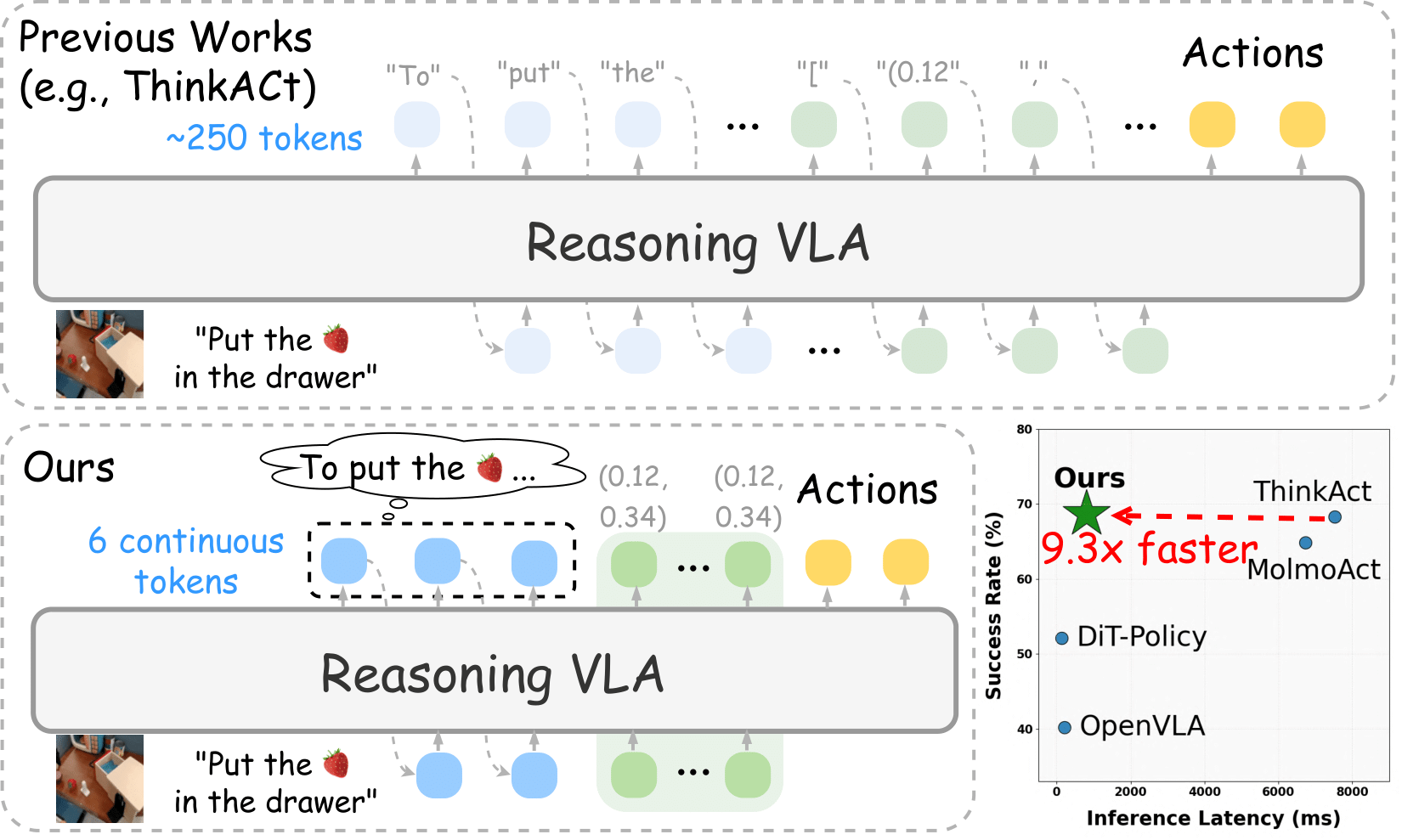

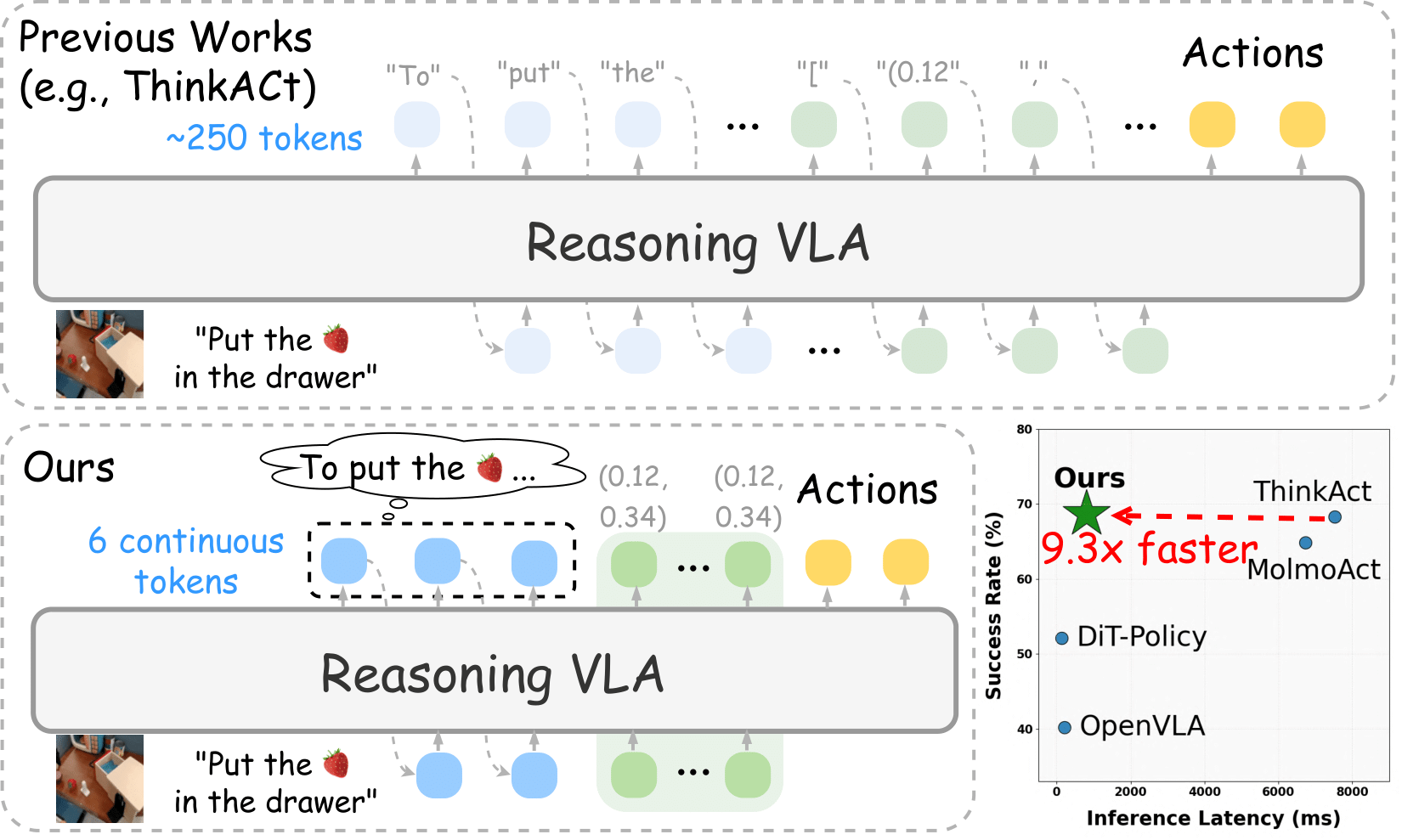

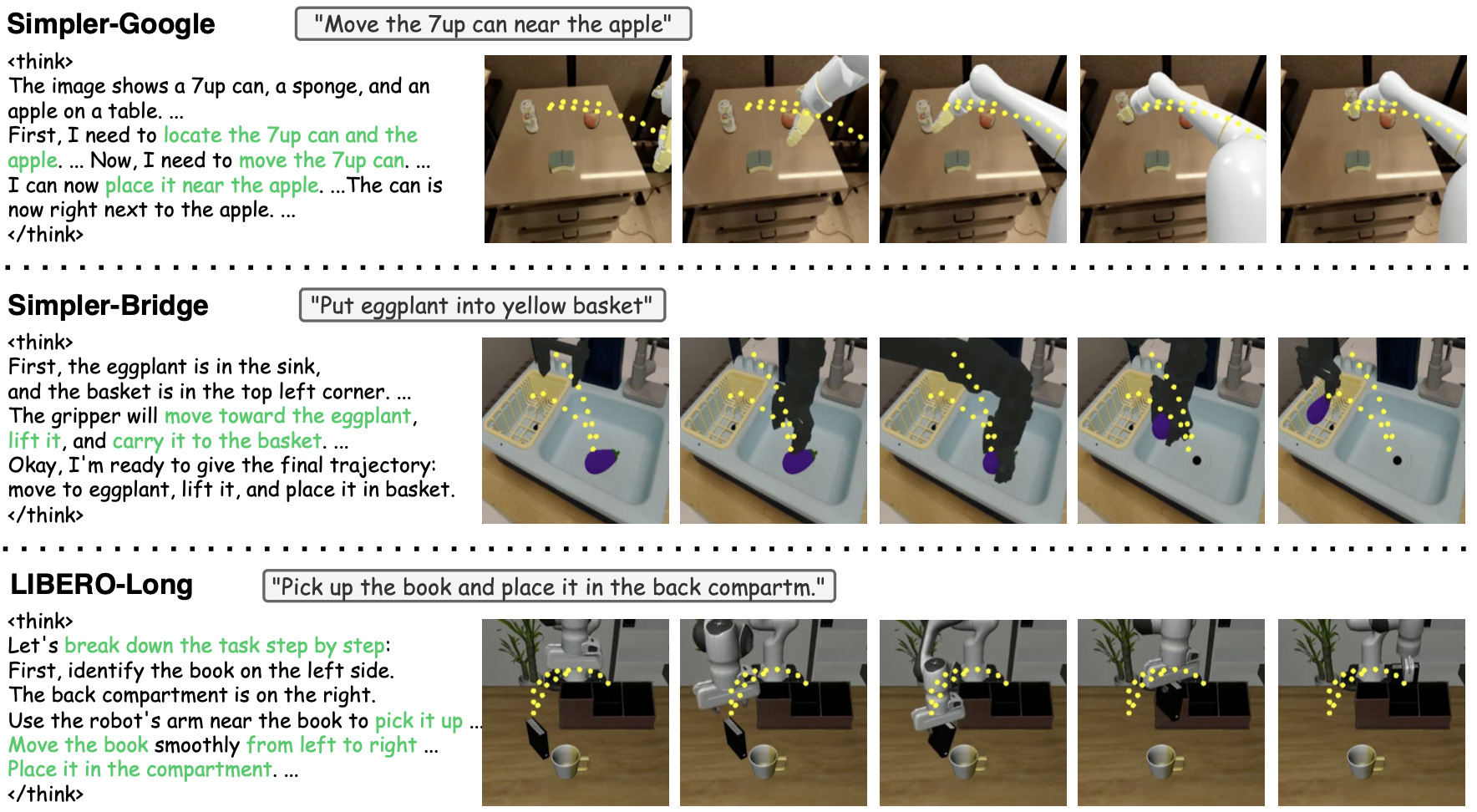

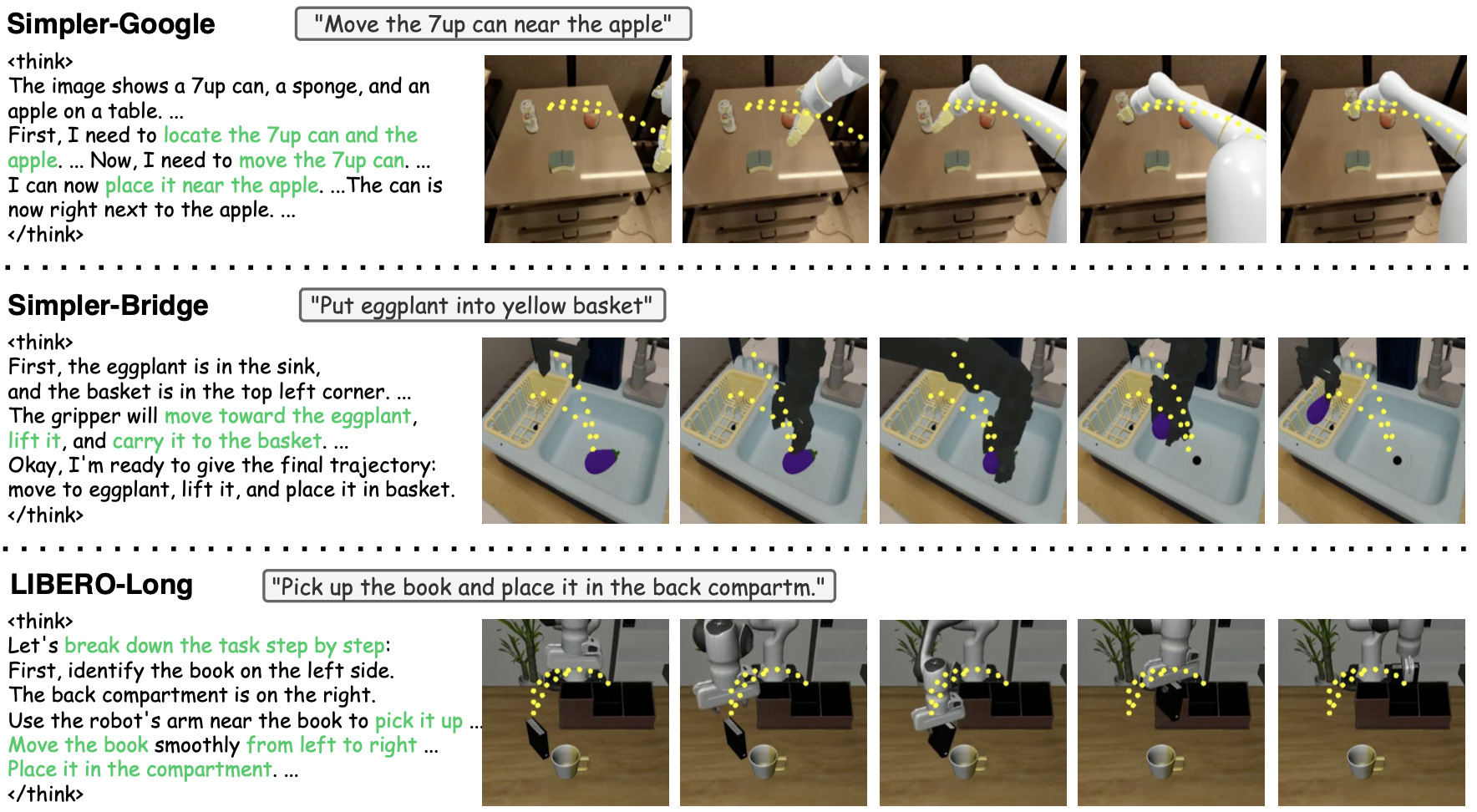

Chi-Pin Huang, Yunze Man, Zhiding Yu, Min-Hung Chen, Jan Kautz, Yu-Chiang Frank Wang, Fu-En Yang
arXiv, 2026
paper / arXiv / project
Fast-ThinkAct achieves efficient vision-language-action reasoning by compressing lengthy textual chain-of-thought into a few continuous latents, enabling predictable inference for real-time robotic control. It supports efficient embodied reasoning, long-horizon planning, few-shot adaptation, and robust failure recovery via compact yet expressive latent reasoning. |

|

GR00T Team
NVIDIA Tech Blog, 2025
blog / code
NVIDIA Isaac GR00T N1.6 is an open vision-language-action (VLA) model for generalized humanoid robot skills. This cross-embodiment model takes multimodal input, including language and images, to perform manipulation tasks in diverse environments. |

|

Chi-Pin Huang, Yueh-Hua Wu, Min-Hung Chen, Yu-Chiang Frank Wang, Fu-En Yang
Neural Information Processing Systems (NeurIPS), 2025
paper / arXiv / project
We introduce ThinkAct, a reasoning VLA framework that thinks before acting. By reinforcing its reasoning with action-aligned visual feedback, ThinkAct enables few-shot adaptation, long-horizon planning, and self-correction in embodied tasks. |

|

Chin-Yang Lin, Cheng Sun, Fu-En Yang, Min-Hung Chen, Yen-Yu Lin, Yu-Lun Liu
IEEE International Conference on Computer Vision (ICCV), 2025
paper / arXiv / project / code
LongSplat reconstructs scenes from any casual long video without camera calibration and renders high-quality novel views from any point along your path. |

|

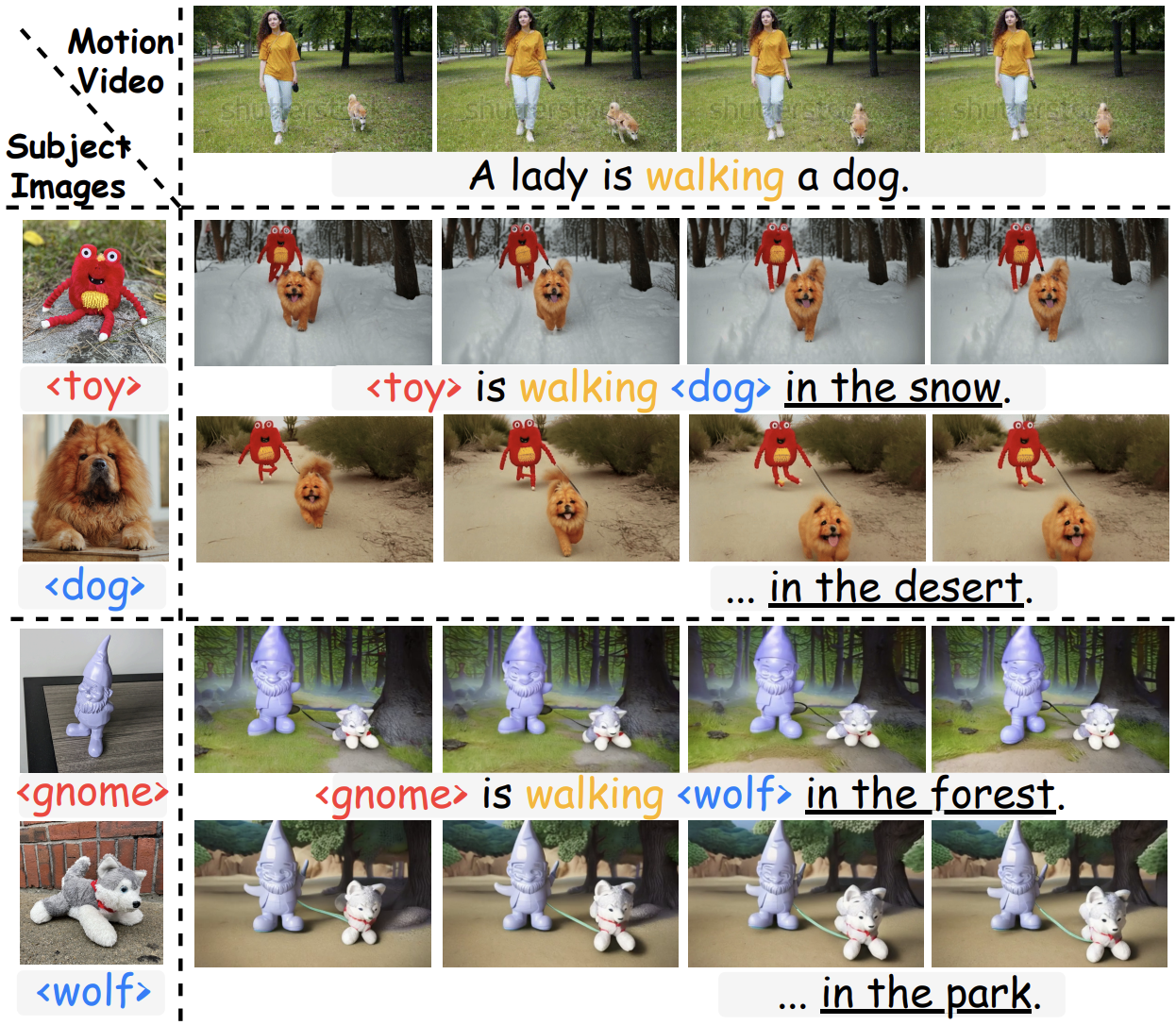

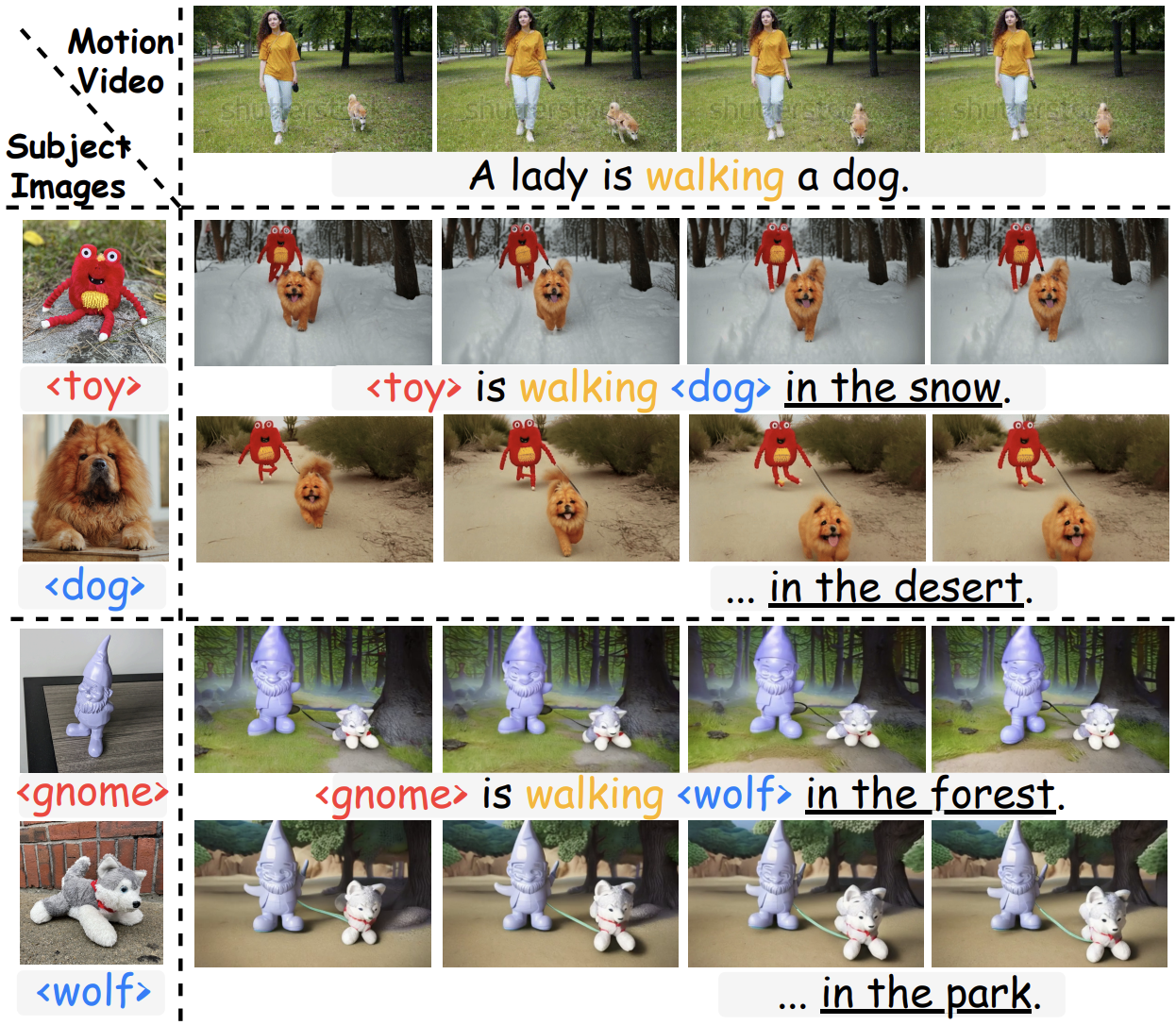

Chi-Pin Huang, Yen-Siang Wu, Hung-Kai Chung, Kai-Po Chang, Fu-En Yang, Yu-Chiang Frank Wang
IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2025
paper / arXiv / project
VideoMage enables text-to-video diffusion models to generate coherent videos with multiple customized subjects and their distinct, controllable motion patterns through disentangled appearance–motion learning and spatial-temporal composition. |

|

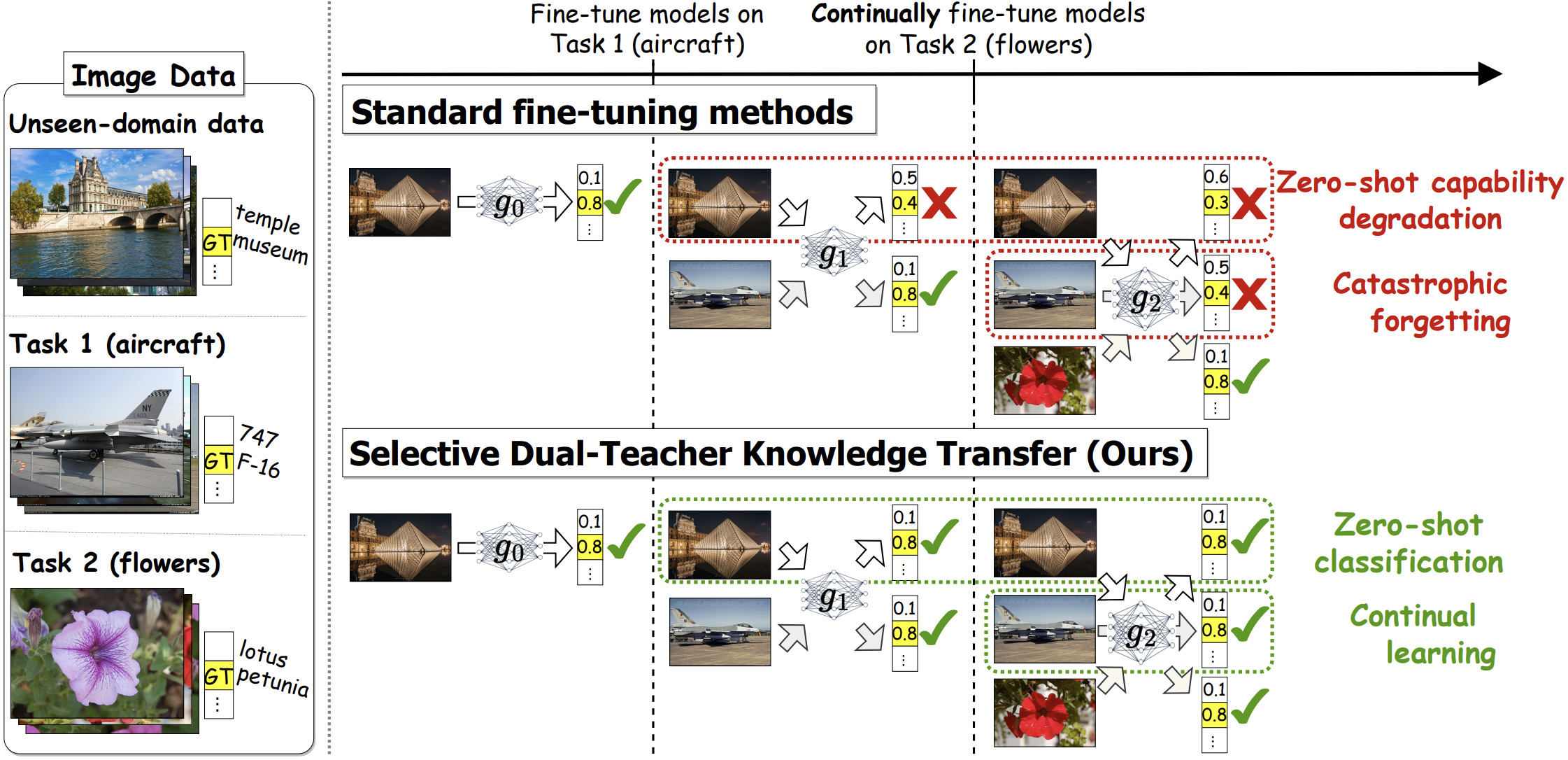

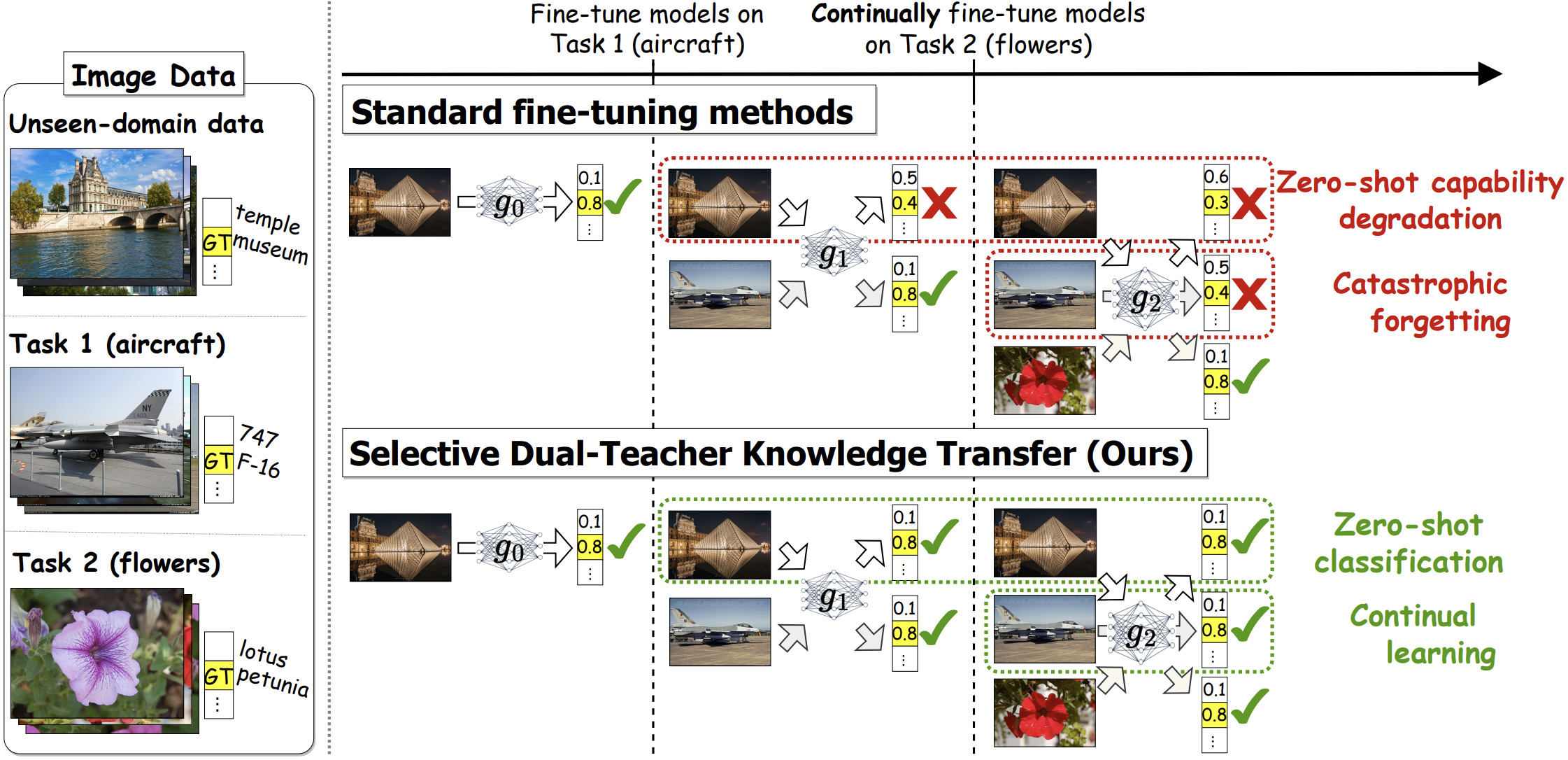

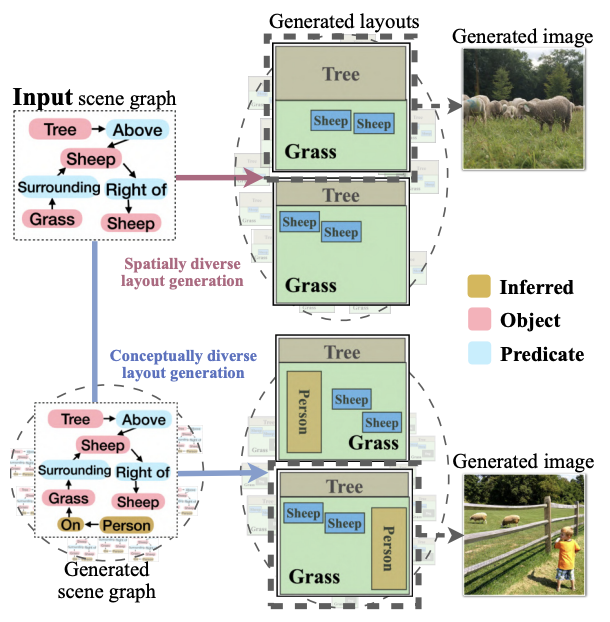

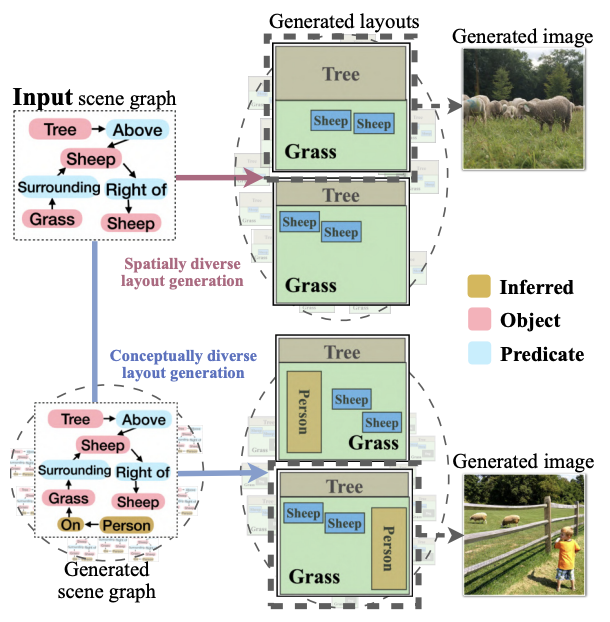

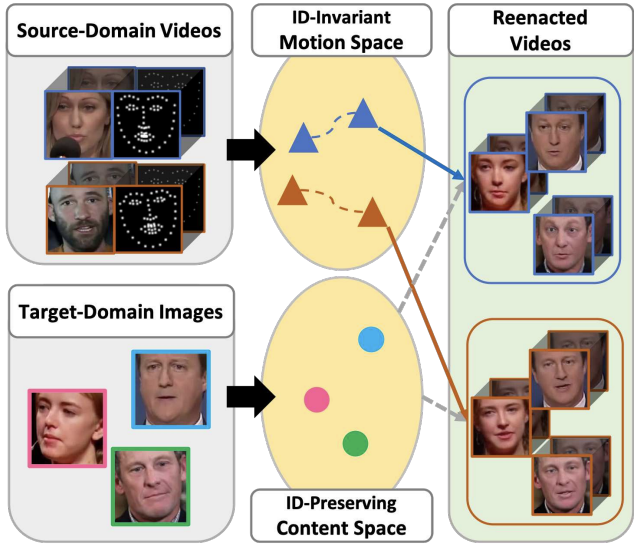

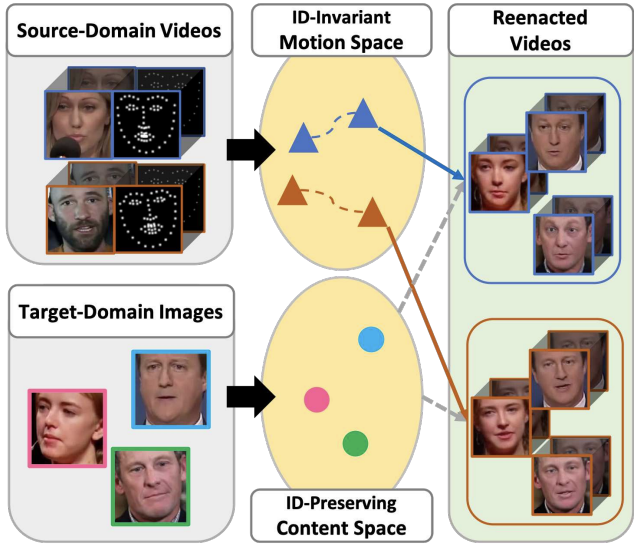

Chi-Pin Huang, Kai-Po Chang, Chung-Ting Tsai, Yung-Hsuan Lai, Fu-En Yang, Yu-Chiang Frank Wang
European Conference on Computer Vision (ECCV), 2024
paper / arXiv / project / code |

|

Yu-Chu Yu, Chi-Pin Huang, Jr-Jen Chen, Kai-Po Chang, Yung-Hsuan Lai, Fu-En Yang, Yu-Chiang Frank Wang
European Conference on Computer Vision (ECCV), 2024
paper / arXiv / project / code |

|

Kai-Po Chang, Chi-Pin Huang, Wei-Yuan Cheng, Fu-En Yang, Chien-Yi Wang, Yung-Hsuan Lai, Yu-Chiang Frank Wang
International Conference on Learning Representations (ICLR), 2024
paper |

|

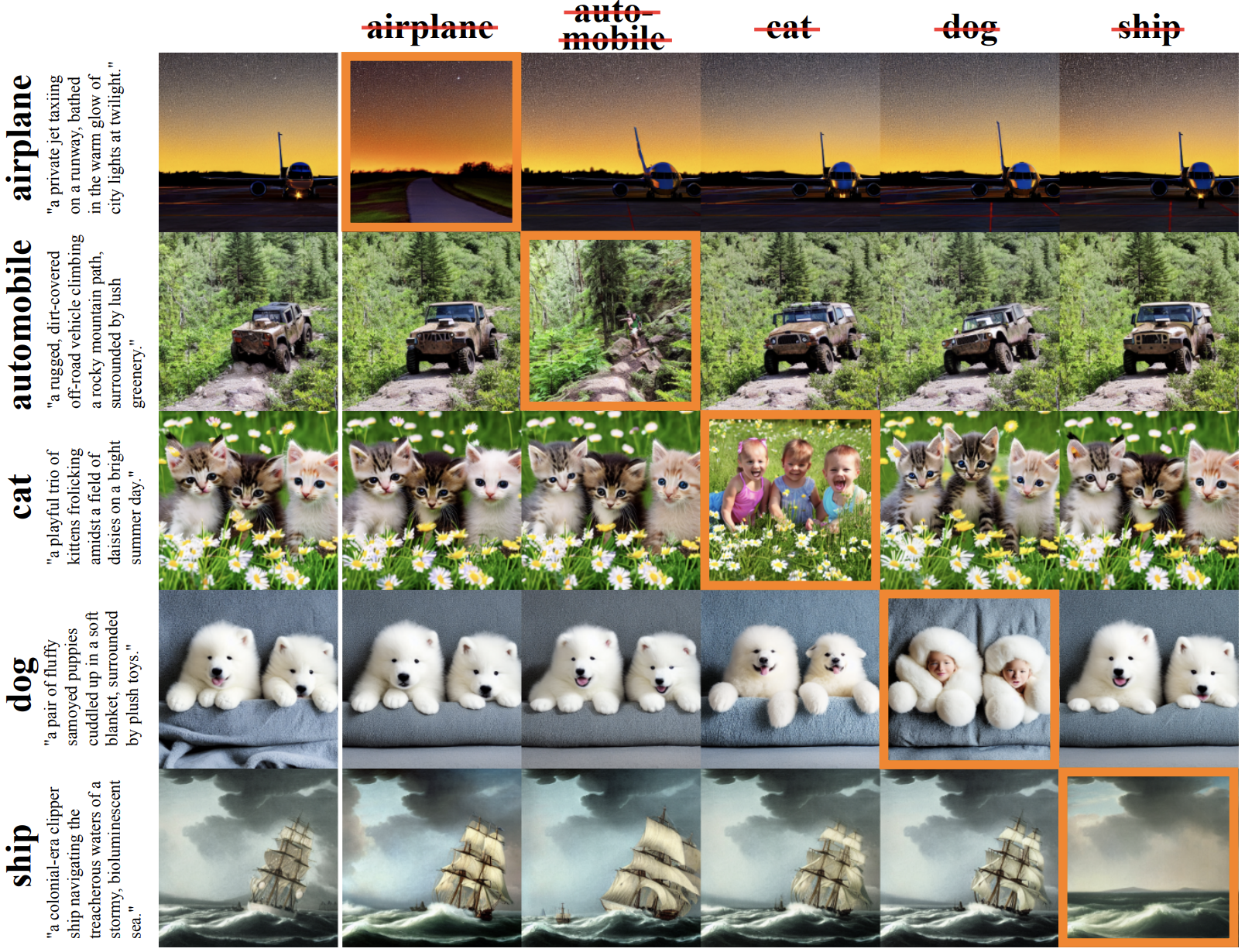

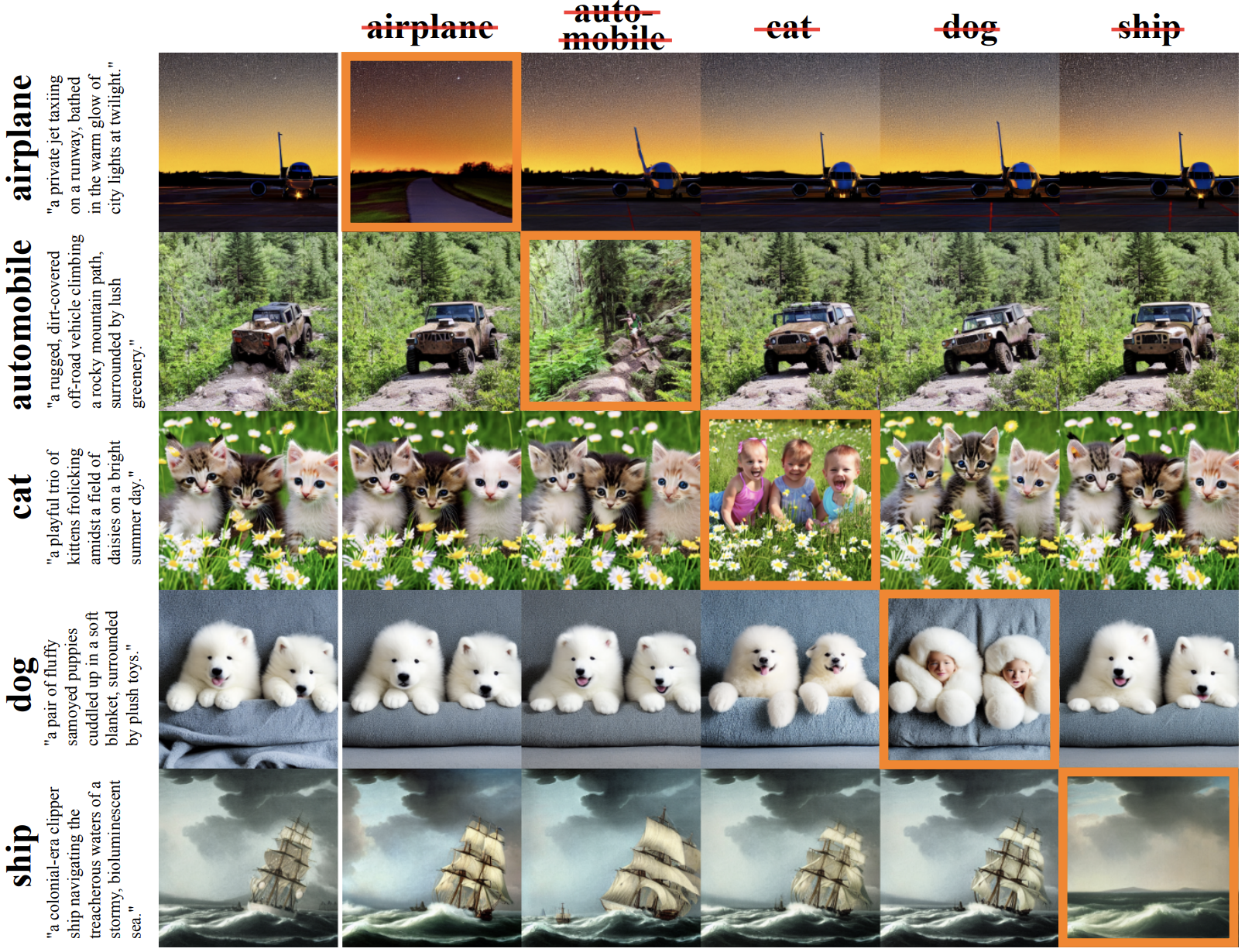

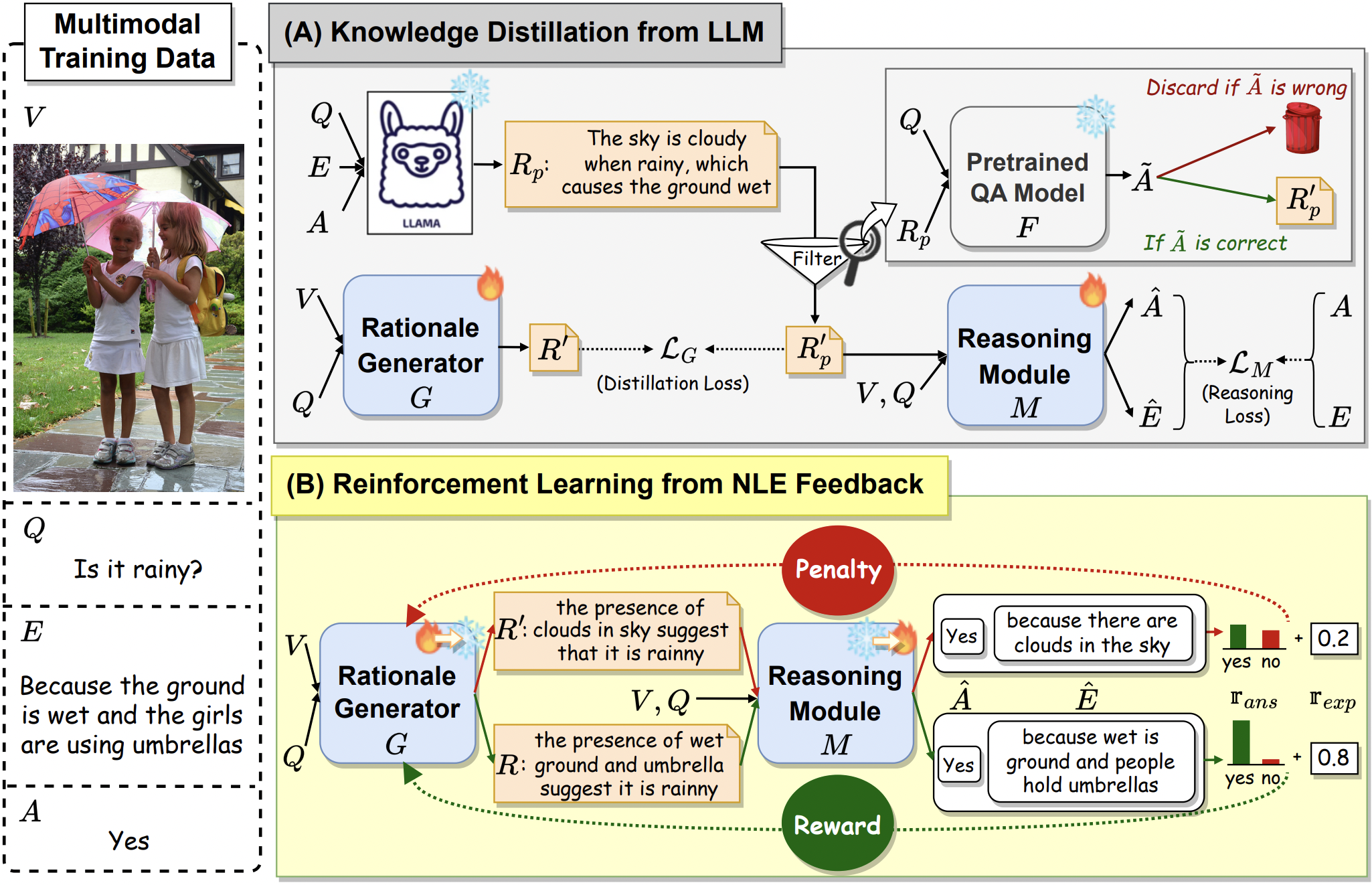

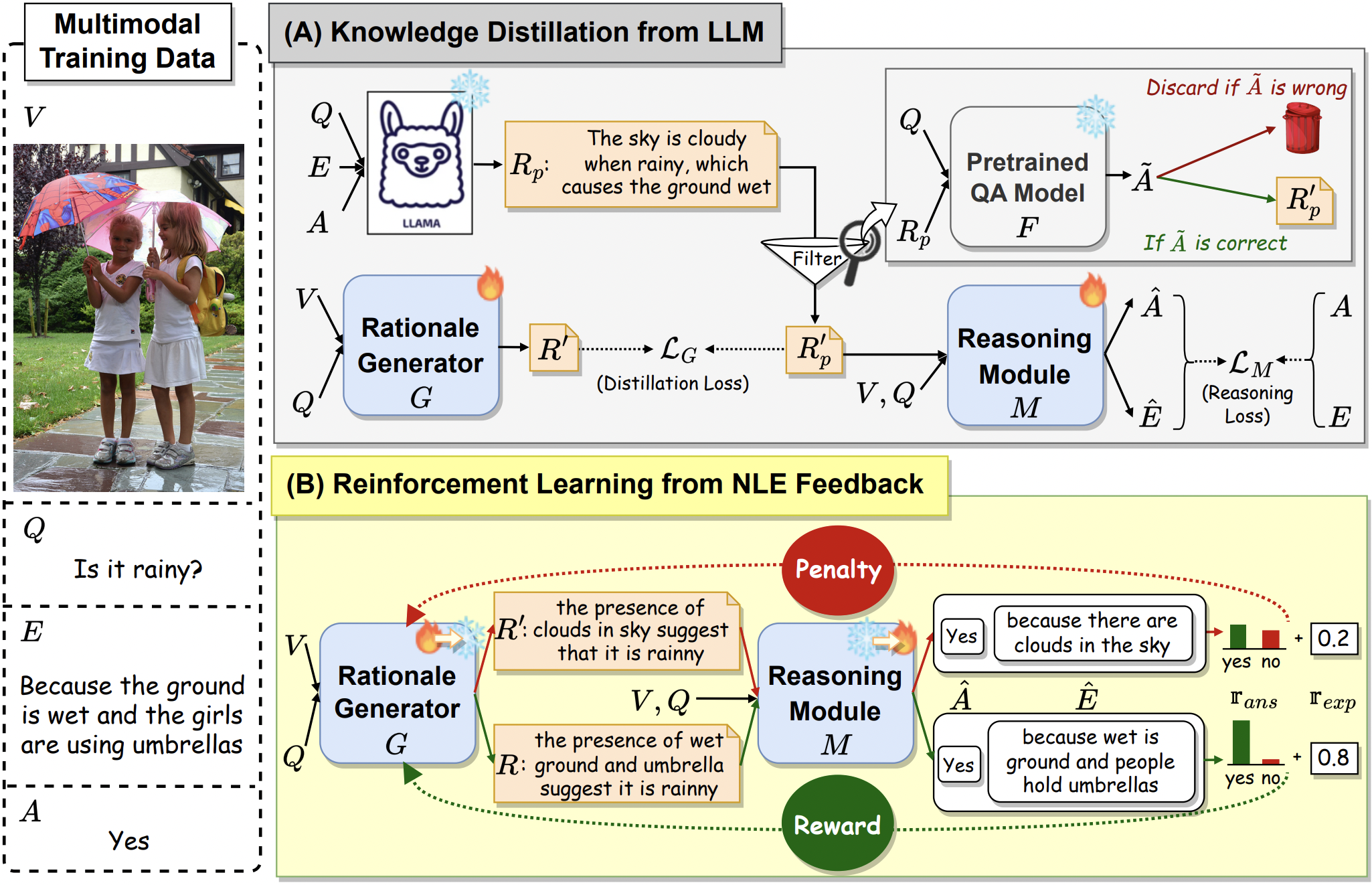

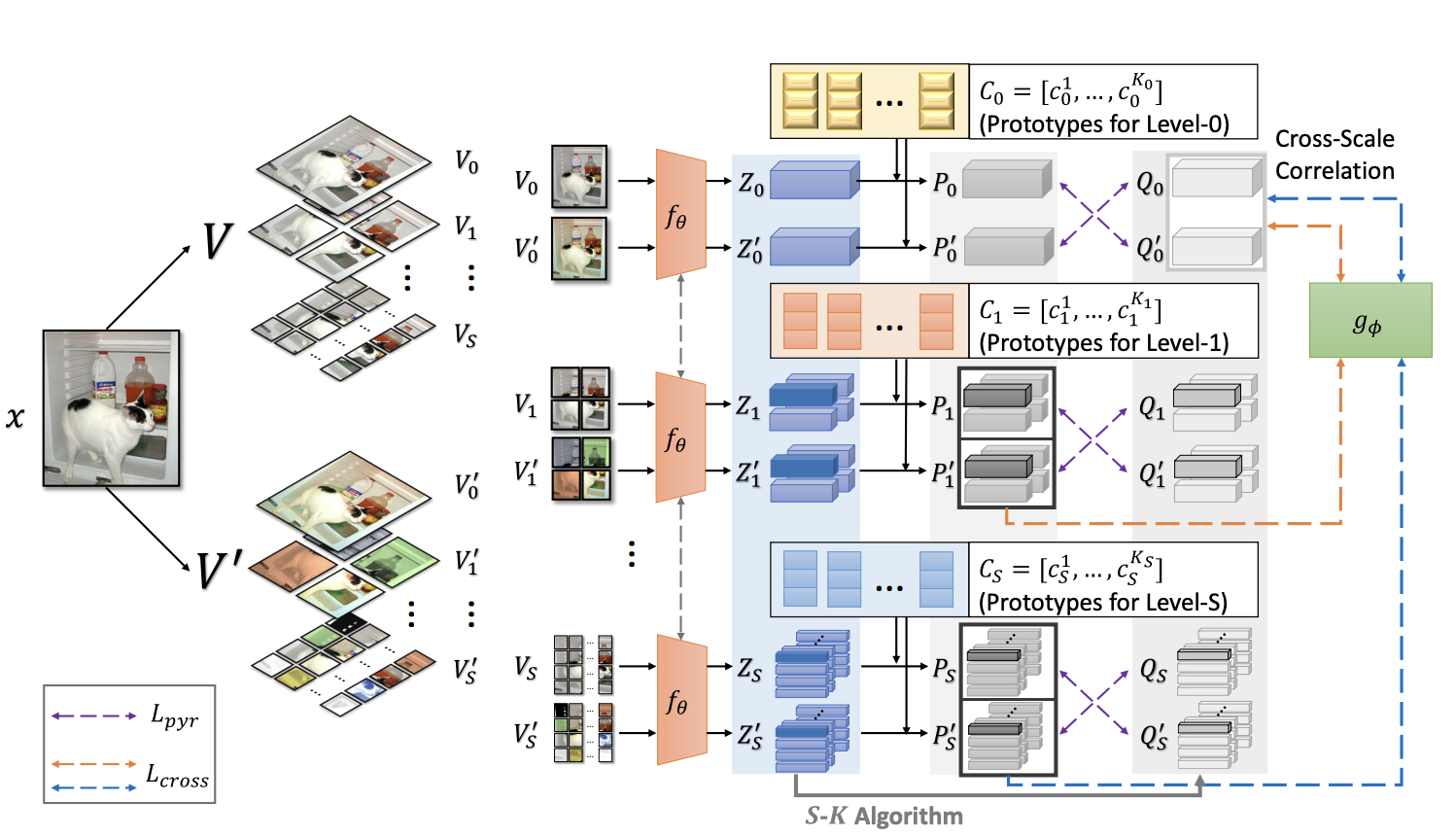

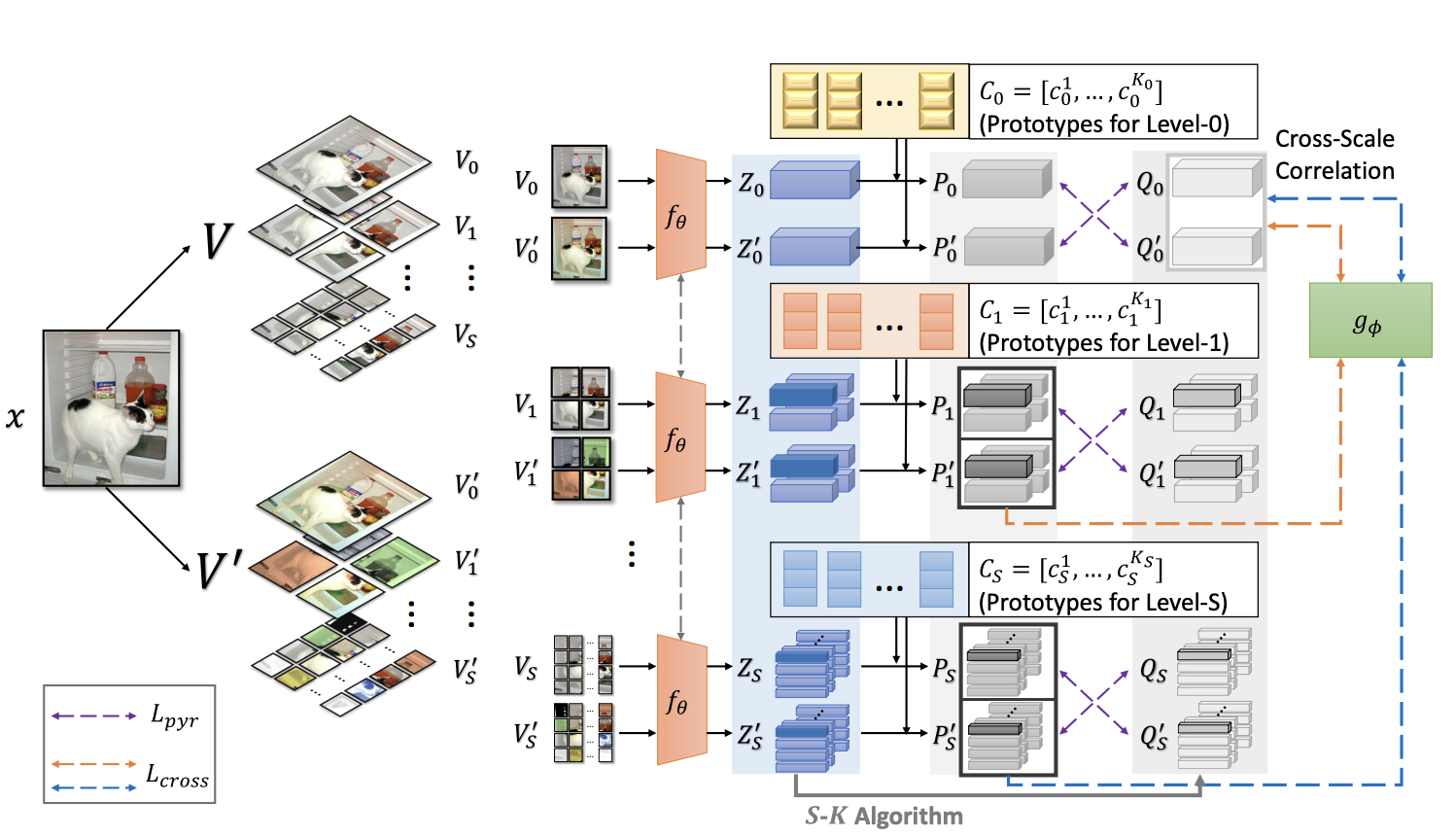

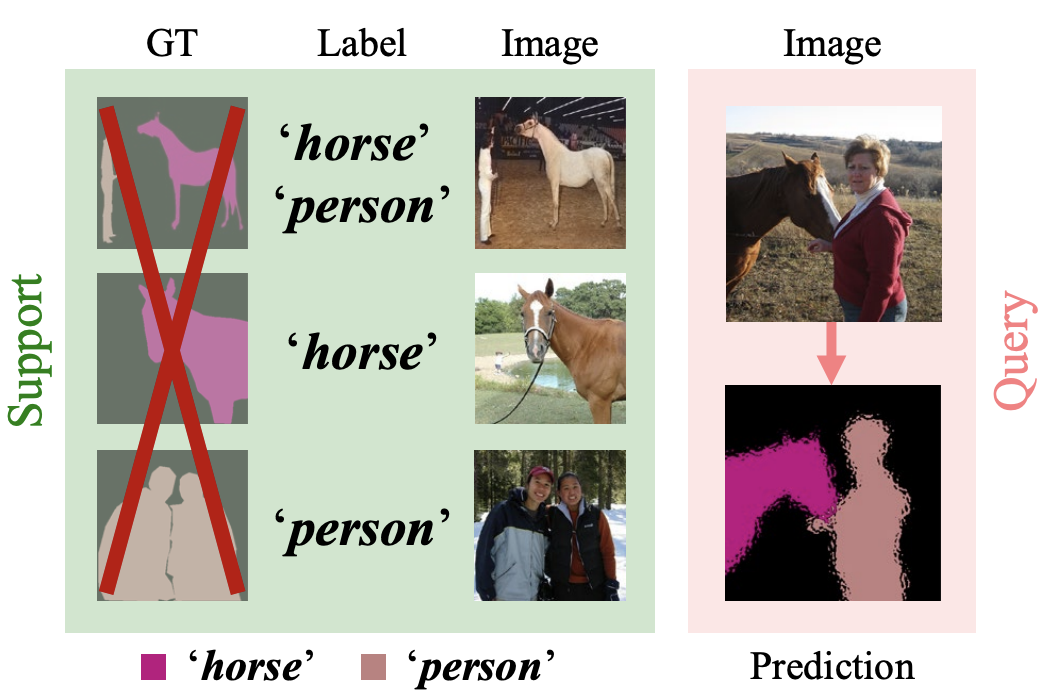

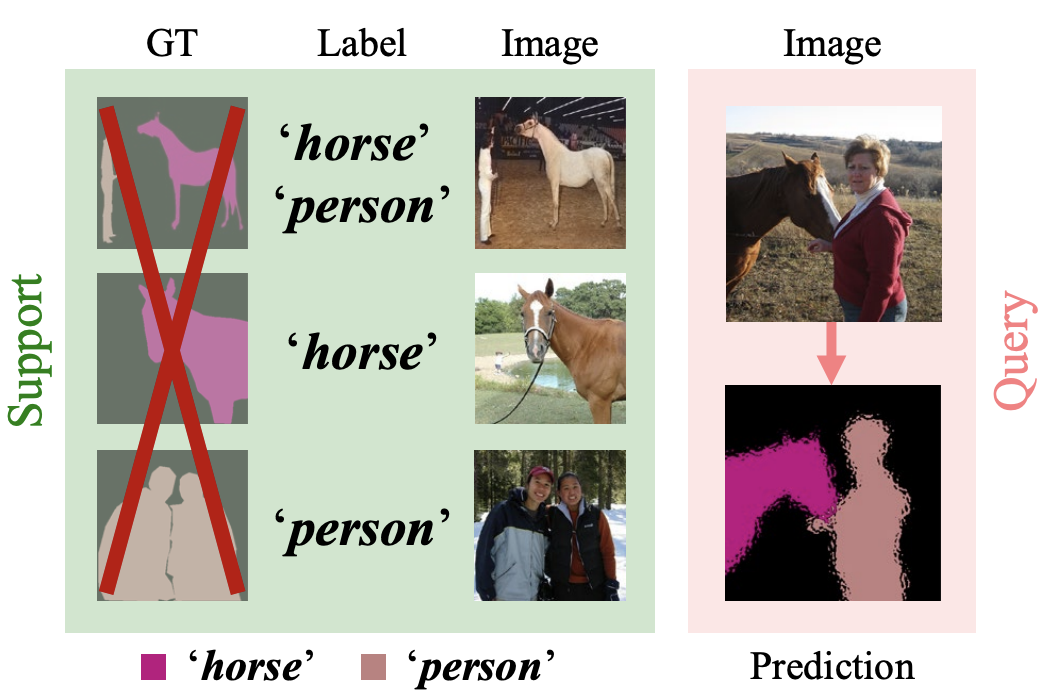

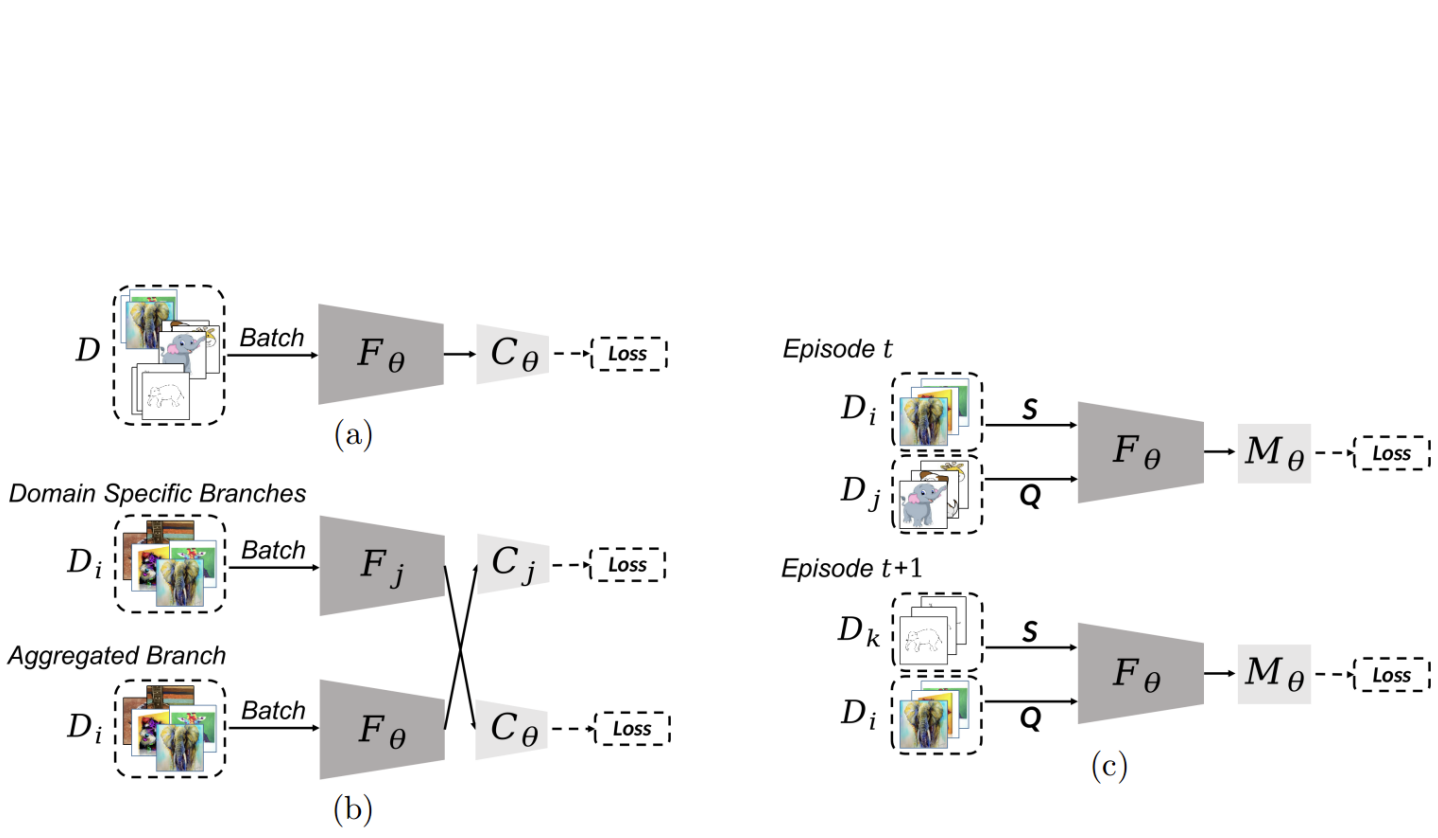

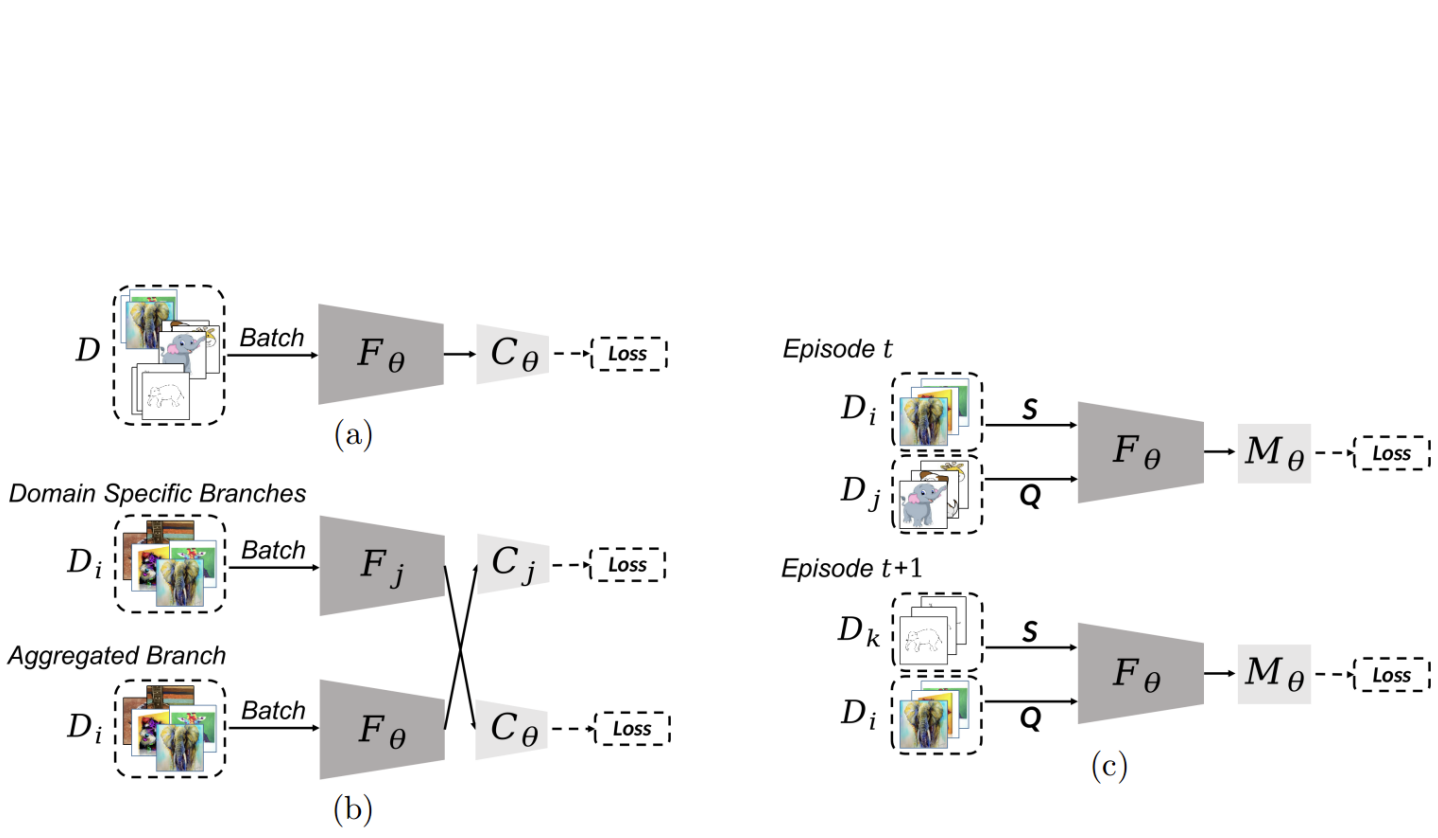

I-Jieh Liu, Ci-Siang Lin, Fu-En Yang, Yu-Chiang Frank Wang
AAAI Conference on Artificial Intelligence (AAAI), 2024
paper / arXiv / webpage / code |

|

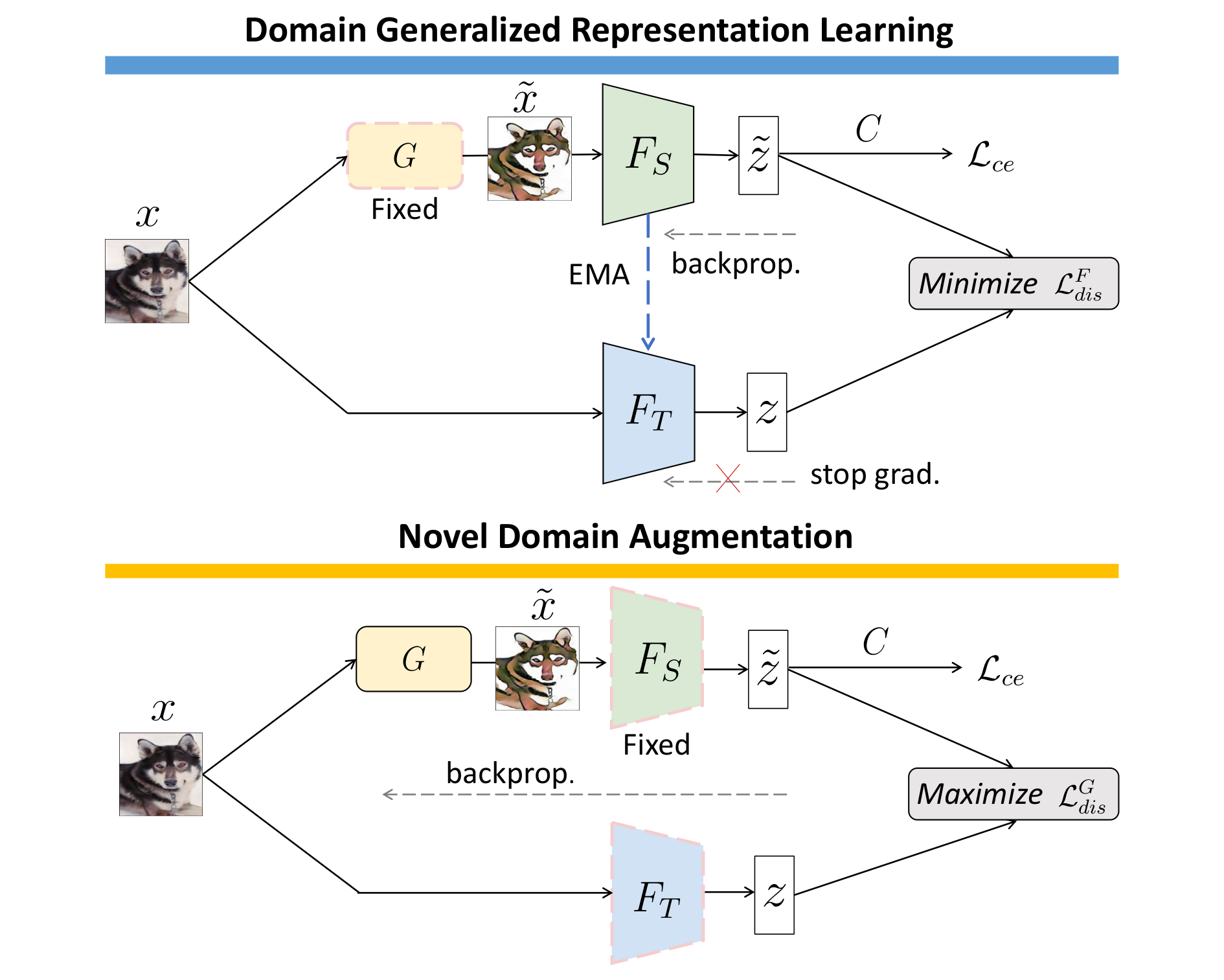

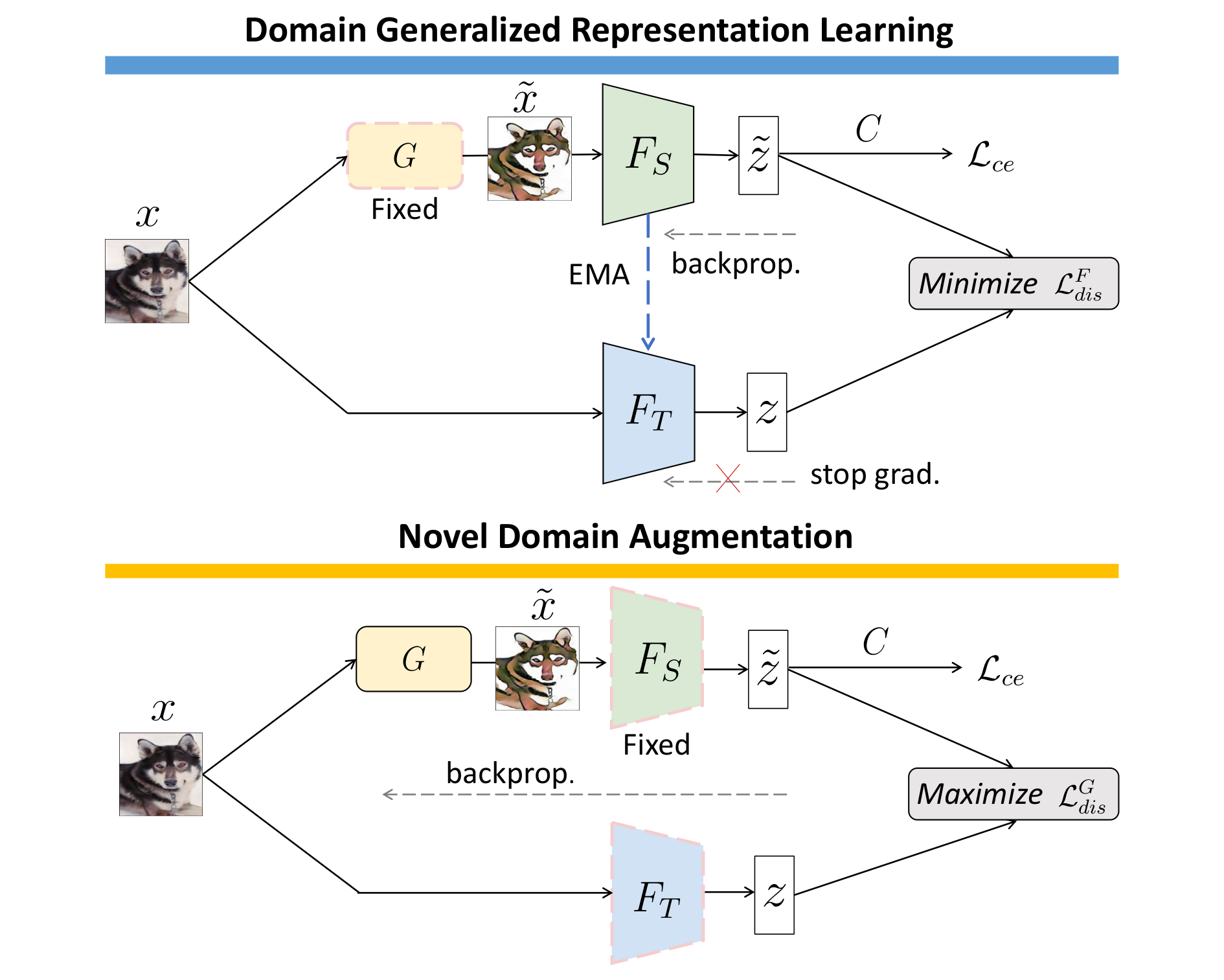

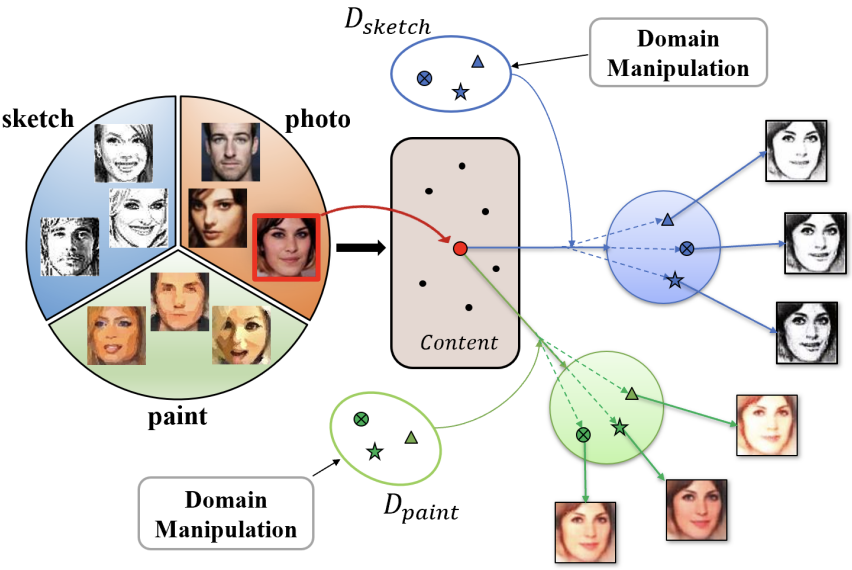

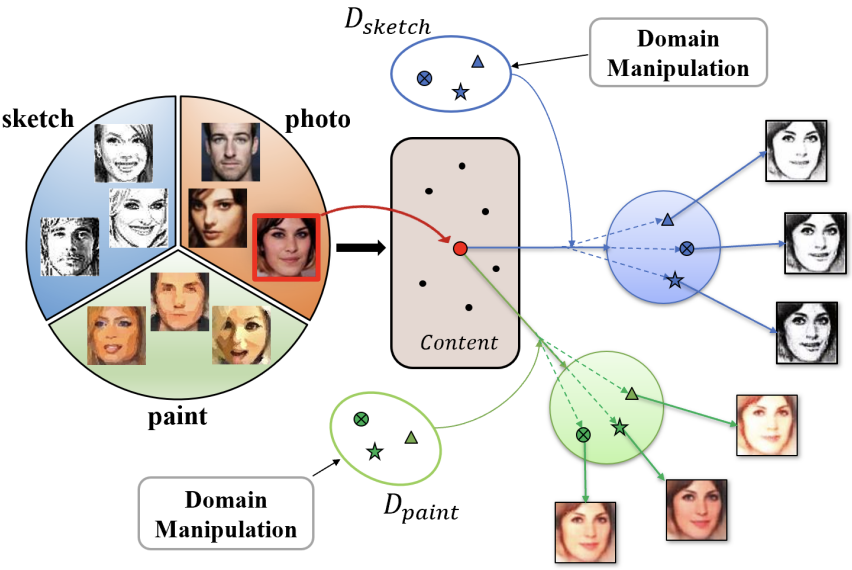

Fu-En Yang, Chien-Yi Wang, Yu-Chiang Frank Wang
IEEE International Conference on Computer Vision (ICCV), 2023
paper / arXiv / poster |

|

Fu-En Yang, Yuan-Hao Lee, Chia-Ching Lin, Yu-Chiang Frank Wang
International Journal of Computer Vision (IJCV), 2023 |

|

Cheng-Yen Hsieh, Chih-Jung Chang, Fu-En Yang, Yu-Chiang Frank Wang
IEEE Winter Conference on Applications of Computer Vision (WACV), 2023
paper / arXiv / code |

|

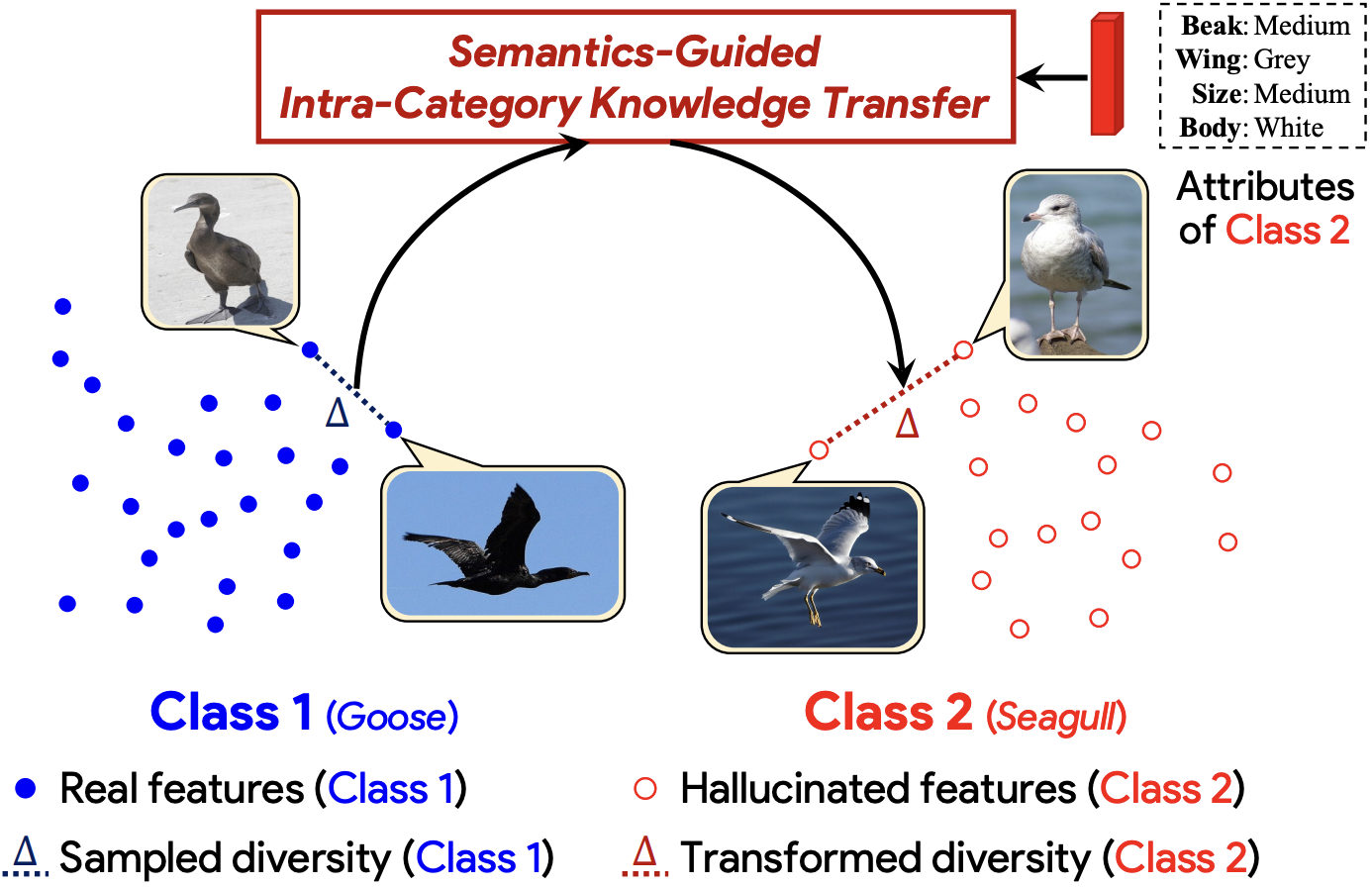

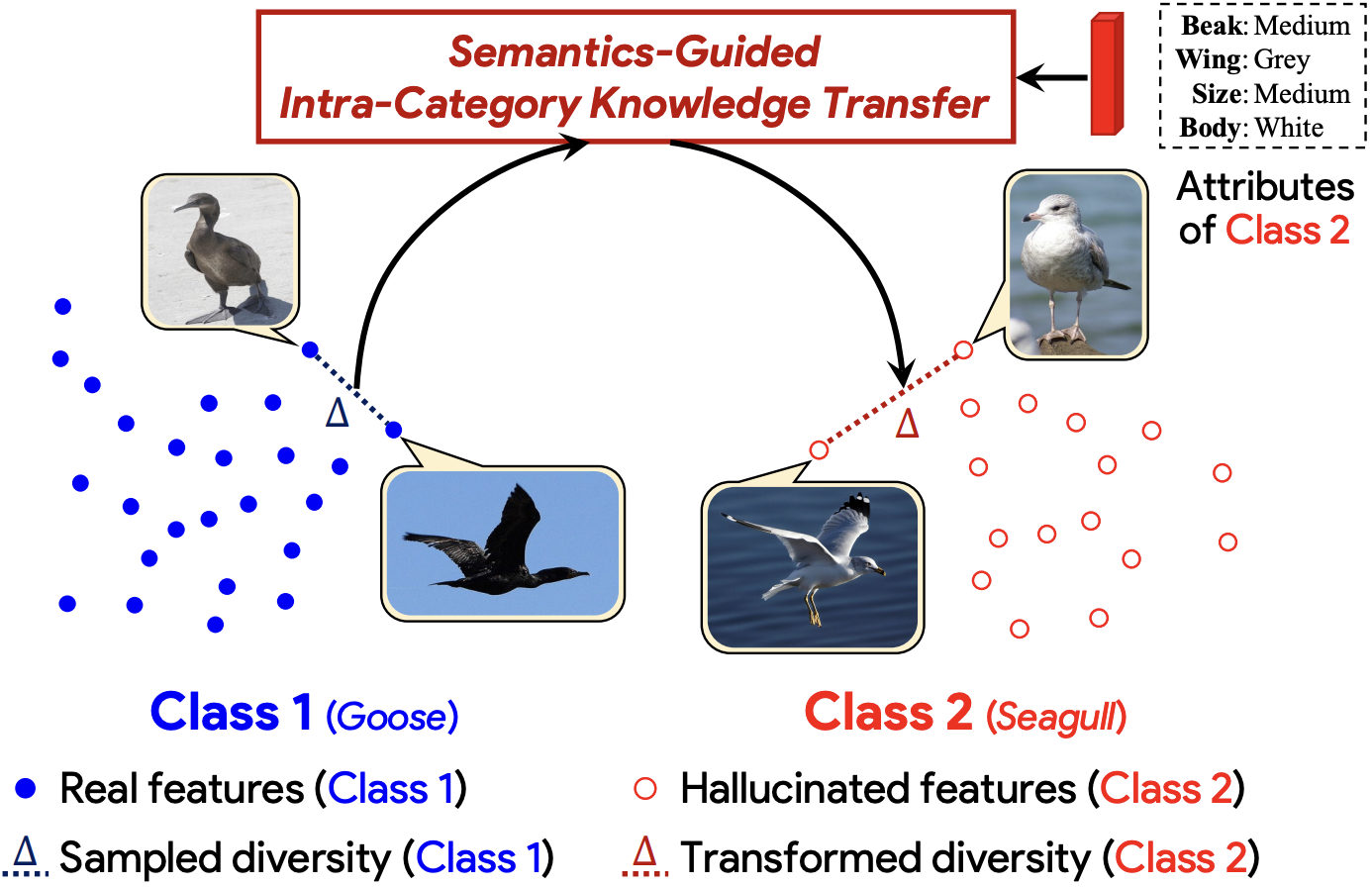

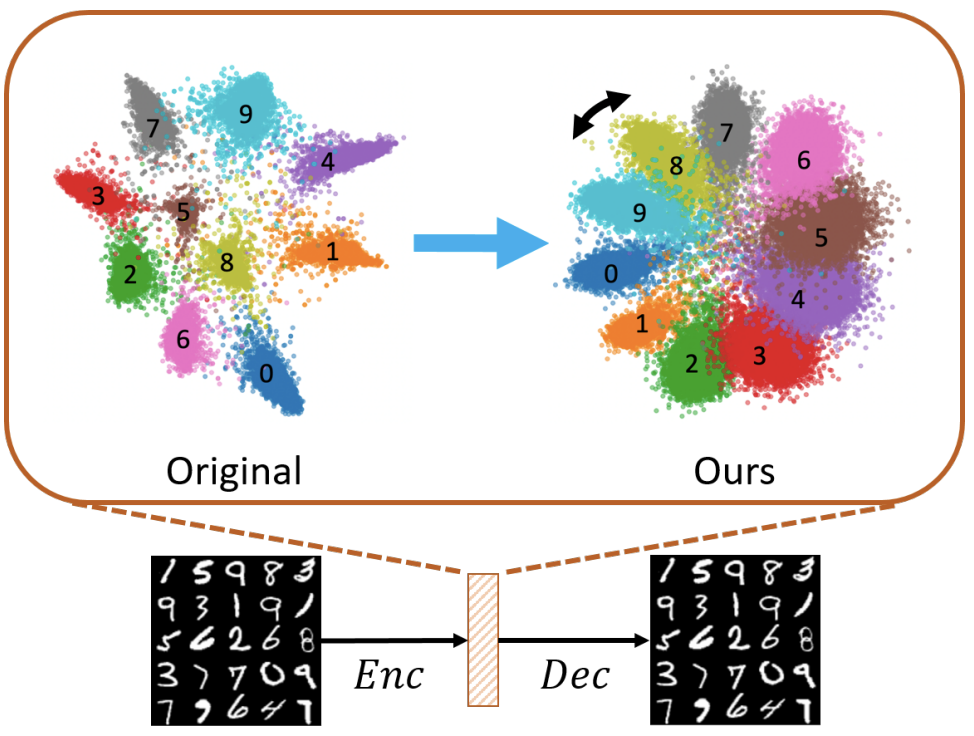

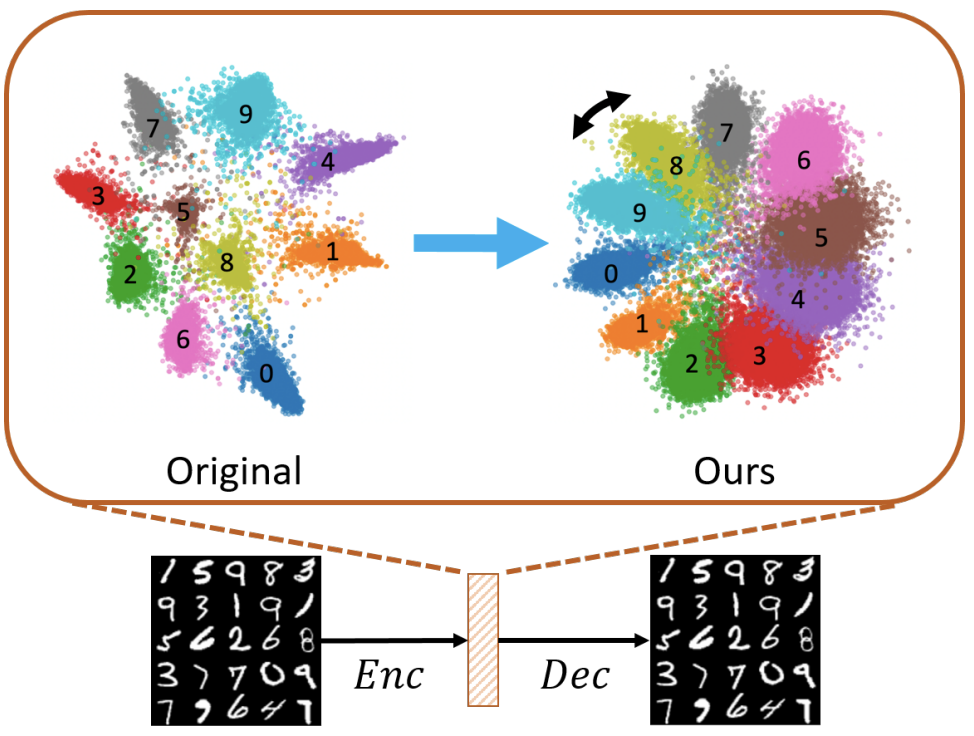

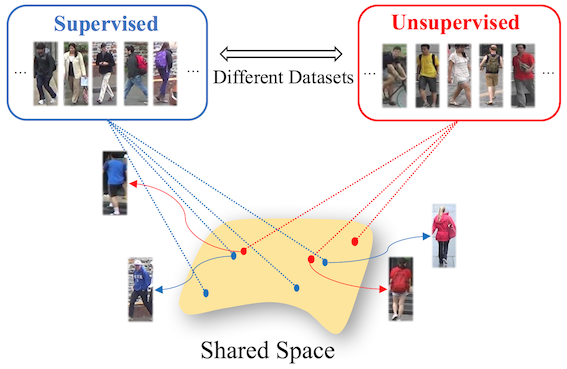

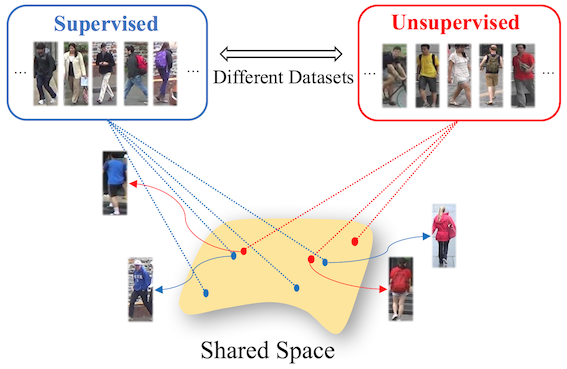

Fu-En Yang, Yuan-Chia Cheng, Zu-Yun Shiau, Yu-Chiang Frank Wang
Advances in Neural Information Processing Systems (NeurIPS), 2021 (Spotlight Presentation)
paper / OpenReview / video / slides / poster |

|

Yuan-Hao Lee, Fu-En Yang, Yu-Chiang Frank Wang
IEEE Winter Conference on Applications of Computer Vision (WACV), 2022
paper / arXiv |

|

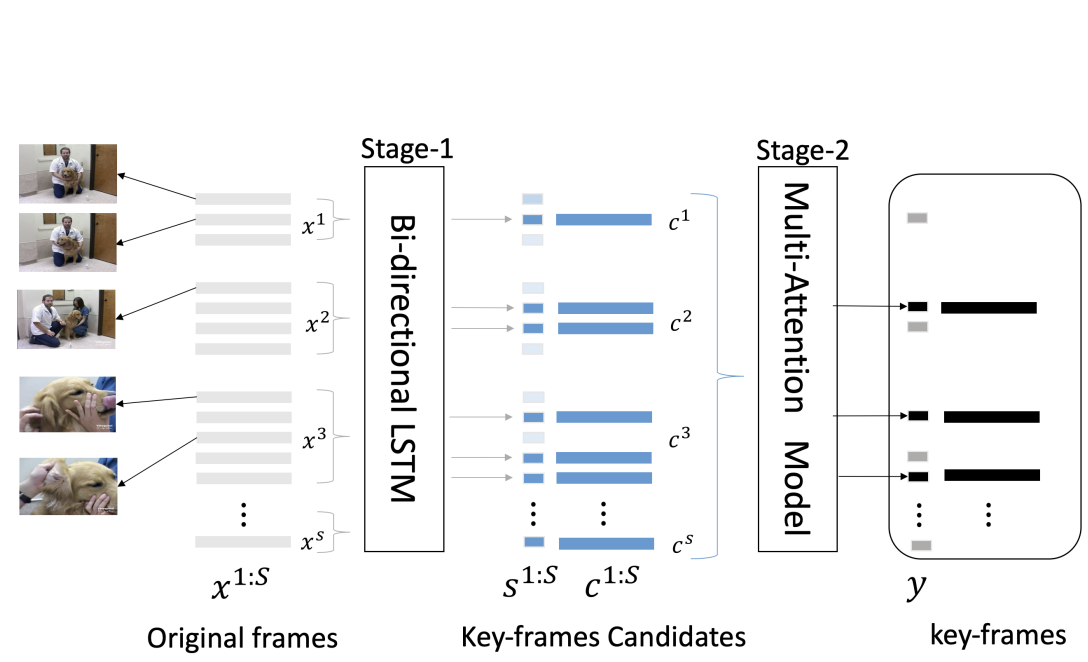

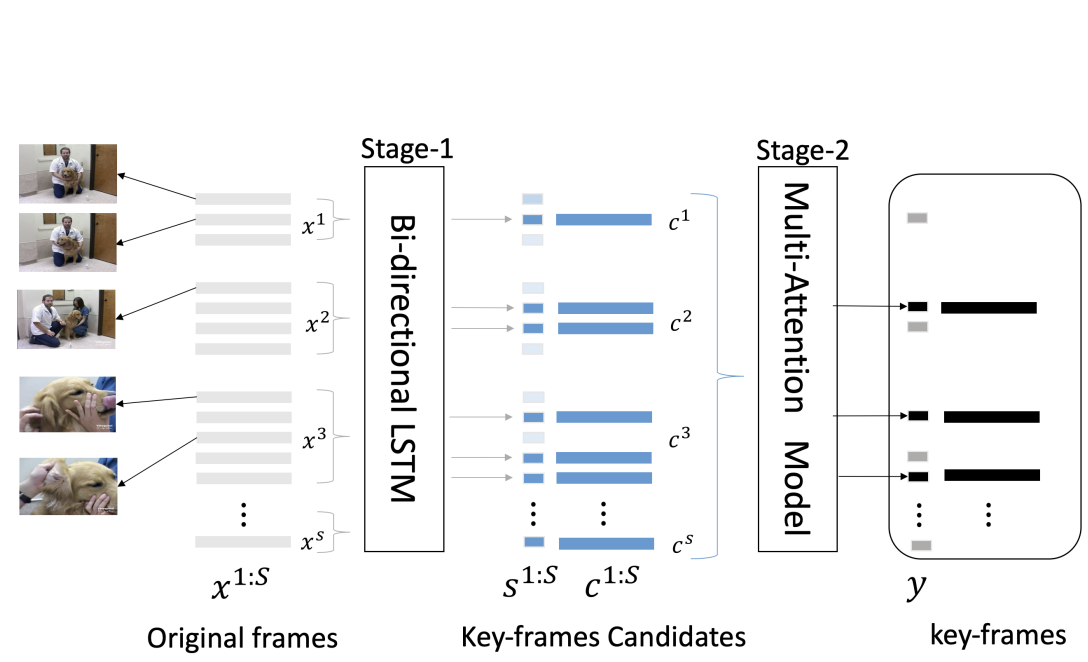

Cheng-Fu Yang*, Wan-Cyuan Fan*, Fu-En Yang, Yu-Chiang Frank Wang
IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021
paper / code |

|

Yuan-Chia Cheng, Ci-Siang Lin, Fu-En Yang, Yu-Chiang Frank Wang
IEEE International Conference on Image Processing (ICIP), 2021
paper / IEEE Xplore / arXiv |

|

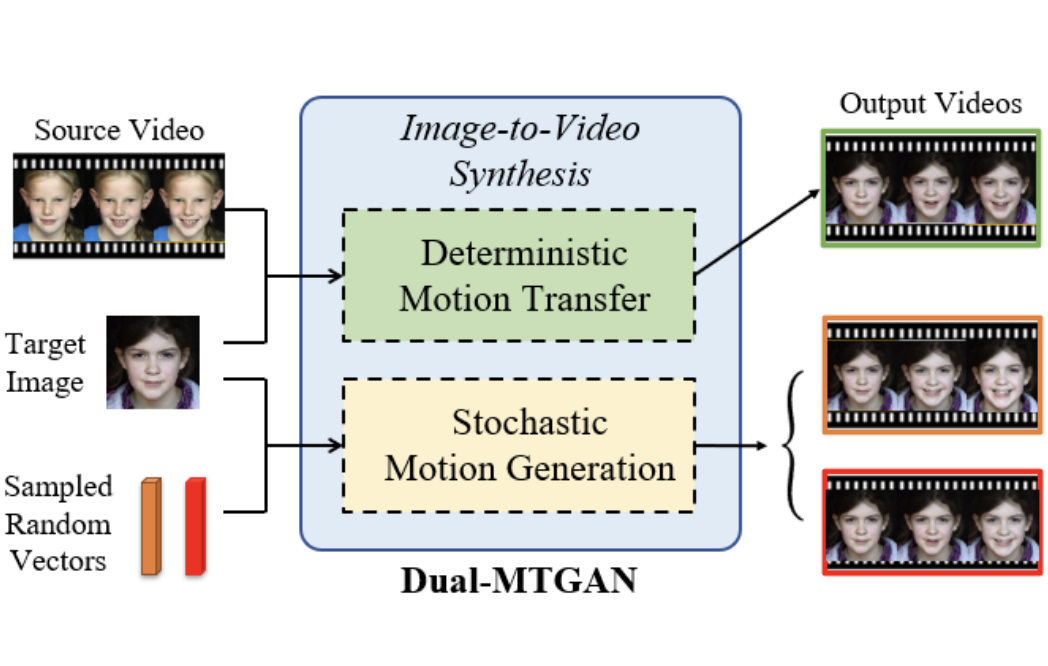

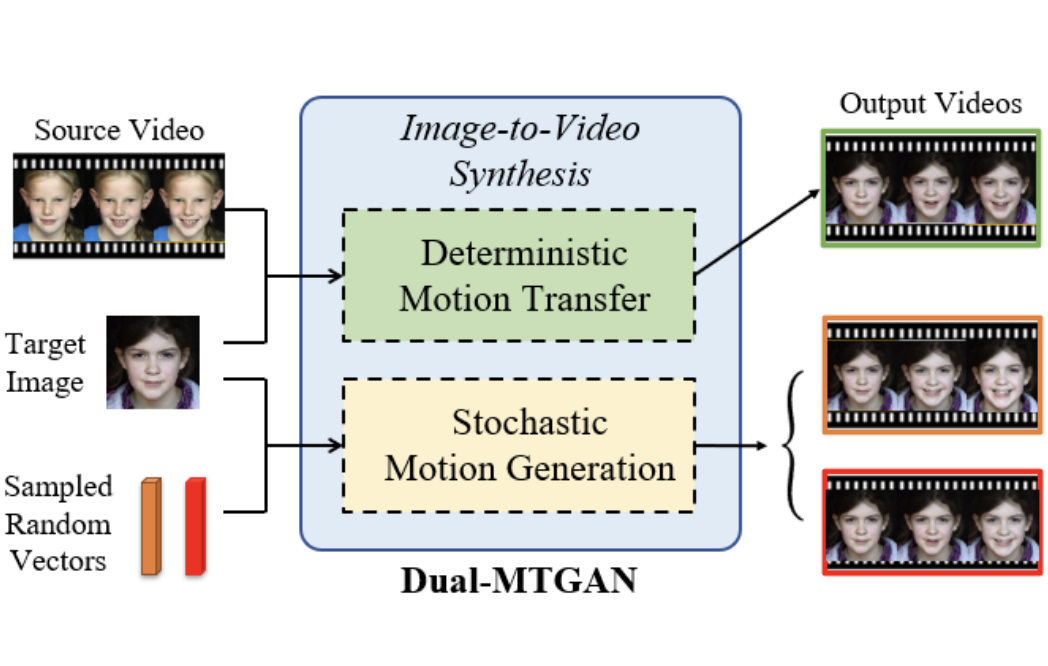

Po-Hsiang Huang, Fu-En Yang, Yu-Chiang Frank Wang
IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020
paper / video |

|

Fu-En Yang*, Jing-Cheng Chang*, Yuan-Hao Lee, Yu-Chiang Frank Wang
IEEE International Conference on Pattern Recognition (ICPR), 2020
paper / IEEE Xplore / arXiv / video / slides |

|

Jia-Wei Yan, Ci-Siang Lin, Fu-En Yang, Yu-Jhe Li, Yu-Chiang Frank Wang
IEEE International Conference on Pattern Recognition (ICPR), 2020
paper / IEEE Xplore / arXiv |

|

Fu-En Yang*, Jing-Cheng Chang*, Chung-Chi Tsai, Yu-Chiang Frank Wang
IEEE Transactions on Image Processing (TIP), 2020
paper / IEEE Xplore |

|

Yen-Ting Liu, Yu-Jhe Li, Fu-En Yang, Shang-Fu Chen, Yu-Chiang Frank Wang
IEEE International Conference on Image Processing (ICIP), 2019
IEEE Xplore |

|

Yu-Jhe Li, Fu-En Yang, Yen-Cheng Liu, Yu-Ying Yeh, Xiaofei Du, Yu-Chiang Frank Wang
IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2018
paper / arXiv / code |

|

|

|

|

The template is designed and shared by Dr. Jon Barron. |